Maxar’s proprietary high-definition (HD) technology enhances the visual clarity of the company’s high-resolution satellite imagery. HD products are noticeably sharper than native-resolution products, which makes the information they contain easier to understand and interpret. These visual improvements expand what customers can do with satellite imagery, because fine details become distinguishable. Features of all sizes and shapes are more defined: building edges, architectural details, road markings, vehicles and much more. The image below shows an example of Maxar’s HD enhancement in image quality; the improvement is easy to see on the solar panels and car windshields.

The Maxar image on the left shows a residential street as natively acquired by WorldView-3 at 31 cm resolution. The image on the right is the same image processed with HD. Lines on the solar panels are sharper and small details like car windshields and the edges of vegetation are also clearer in the HD image.

While the improvements are apparent qualitatively, the benefits of HD are more difficult to quantify. How do we move from qualitative impressions to quantitative metrics that measure how much HD improves interpretability? To provide objective data, we turned to machine learning (ML). One model was trained and tested on native-resolution imagery, while a second model was trained and tested on the same images after HD processing. Comparing the results of the two models yields the metrics.

Results show that models trained on HD images outperformed models trained on native images, detecting features of interest with greater accuracy across variable, real-world conditions. Across repeated tests, the HD model achieved an F1 score more than 6% higher than that of the native-resolution model. A 6% gain is a noticeable improvement for ML applications, and when the task is accurately identifying solar panels, it also translates to several thousand working hours saved.

The use case

When determining the use case for this study, we focused on object detection subjects beneficial to global initiatives. Particular attention was given to features valuable in supporting the United Nations Sustainable Development Goals (SDGs). This led to a couple of questions: What features can be seen more clearly with HD imagery than at native resolution, and which features provide valuable insight into current priorities? Answer: solar panels.

Solar power systems derive affordable, reliable, sustainable and clean energy from the sun. Understanding the number and distribution of solar panels is essential for evaluating the potential clean energy output of a region, valuing infrastructure for insurance purposes and assessing energy production in an area.

With this use case, we can address various SDGs by quantifying the growth of renewable infrastructure, preparing road maps for universal energy access, providing resources for national climate adaptation plans and quantifying essential information supporting climate efforts. With nearly 760 million people lacking access to electricity [1], efforts to quantify solar panel distributions can play an important role in expanding sustainable energy worldwide.

In addition, solar panels can be difficult to detect reliably with ML because of variability within panels, the orientation and tilt of panels, and variation in the materials used to build them. Because of the economic and cultural importance of solar energy, as well as the challenge of identifying these features in satellite imagery, we determined that solar panel identification would be a strong test for our HD imagery.

The dataset and model

The solar panel dataset consisted of about 100 sq km of Maxar WorldView-3 imagery of southern Germany, which was selected for the prevalence of solar panels in the region. Two versions of the imagery were created: one at native 30 cm resolution and one at 15 cm HD resolution. Other than HD processing, the images used for the models were identical.

When training an object detection model, the most common approach is to label objects with bounding boxes: rectangular boxes that enclose each object of interest. The dataset was created by drawing bounding boxes around the solar panels visible in the HD images and recording their coordinates. These bounding box coordinates were then transferred to the native-resolution images, establishing a training dataset for each image type.
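Because the HD images have half the pixel size of the native images (15 cm versus 30 cm), transferring a box labeled in HD pixel coordinates to the native pixel grid amounts to rescaling its corners. The sketch below assumes both rasters cover the same footprint with the same upper-left origin and that boxes are stored as pixel corners `(x_min, y_min, x_max, y_max)`; the helper `rescale_box` is hypothetical, not part of the study's tooling.

```python
from typing import Tuple

# (x_min, y_min, x_max, y_max) in pixel coordinates
Box = Tuple[float, float, float, float]


def rescale_box(box: Box, src_gsd_m: float = 0.15, dst_gsd_m: float = 0.30) -> Box:
    """Map a pixel-space box from a raster with src_gsd_m pixels to one with dst_gsd_m pixels.

    Assumes both rasters share the same footprint and upper-left origin,
    so only the pixel size (ground sample distance) differs.
    """
    scale = src_gsd_m / dst_gsd_m  # 0.15 / 0.30 = 0.5: one native pixel spans two HD pixels
    x_min, y_min, x_max, y_max = box
    return (x_min * scale, y_min * scale, x_max * scale, y_max * scale)


# A 60 x 40 pixel box in the HD image becomes a 30 x 20 pixel box in the native image.
print(rescale_box((100.0, 40.0, 160.0, 80.0)))  # (50.0, 20.0, 80.0, 40.0)
```

If the labels are instead stored in georeferenced map coordinates, no rescaling is needed under the same assumption, since both images share one ground footprint.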

For the task of detecting solar panels in satellite imagery, we selected an open-source object detection model, You Only Look Twice (YOLTv4) [2]. YOLTv4 has proved successful at detecting objects of different sizes (airplanes, boats and cars) in satellite imagery, and its open-source code makes experiments easy to reproduce. Two YOLTv4 models were trained: one on 30 cm native imagery and one on 15 cm HD imagery.

Training and evaluating the model

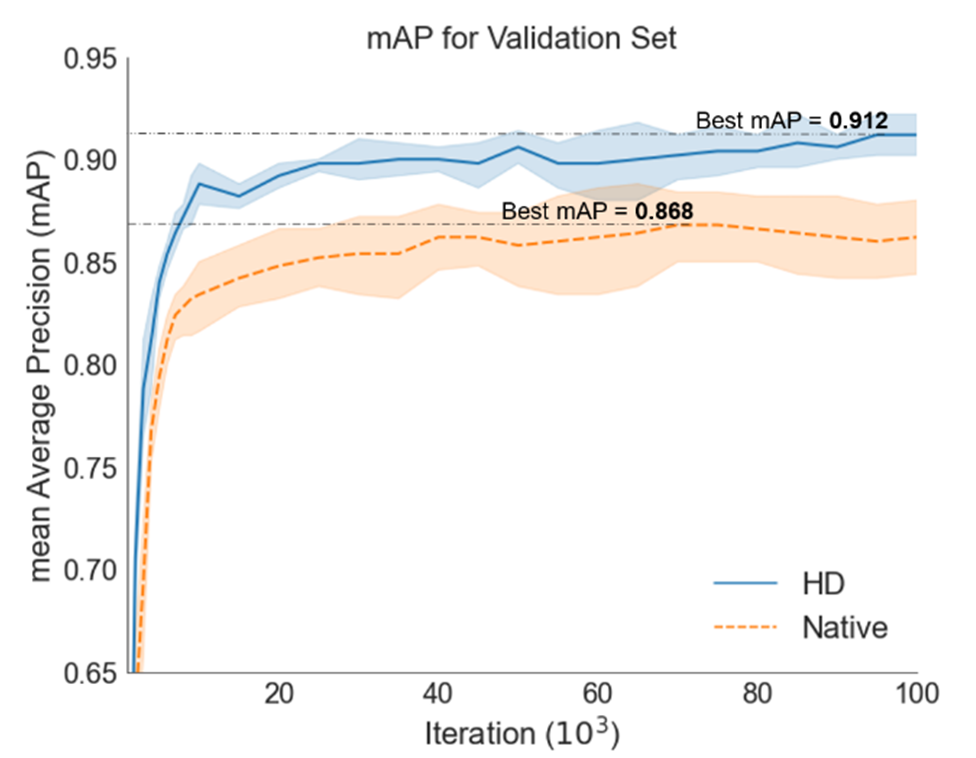

Each dataset was split into three groups with no images or object labels in common: training images, validation images and test images. Throughout model training, the training images taught the model how to recognize solar panels. The validation images, also used during training, provided an unbiased evaluation of the model while hyperparameters were tuned. The metric calculated during this evaluation is mean average precision (mAP). Average precision (AP) summarizes precision across all detection confidence thresholds, computed with the current model parameters on the validation dataset; mAP averages AP over object classes, so with solar panels as the only class, mAP equals AP. The chart below compares the mAP achieved by each model during training.

The mAP range for each model across repeated tests shows that the HD model outperforms the native model throughout training. Comparing the best mAP values of the two models illustrates the HD model’s superior ability to detect solar panels in data it was not trained on, while the HD model’s narrower range of mAP values during training indicates that it is more stable than the native model.
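For a single class such as solar panels, AP can be computed from a ranked list of detections, each already marked as a true or false positive against the ground truth. The sketch below uses all-point interpolation of the precision-recall curve, one common convention (used by PASCAL VOC since 2010); the interpolation used inside the YOLTv4 training loop may differ, and `average_precision` is a hypothetical helper.

```python
import numpy as np


def average_precision(scores, is_true_positive, num_ground_truth):
    """Single-class AP as the area under the interpolated precision-recall curve.

    scores: confidence of each detection.
    is_true_positive: 1 if the detection matched a ground truth box, else 0.
    num_ground_truth: total number of ground truth boxes (must be > 0).
    """
    # Rank detections from most to least confident.
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    tp = np.asarray(is_true_positive, dtype=float)[order]
    cum_tp = np.cumsum(tp)
    cum_fp = np.cumsum(1.0 - tp)
    recall = cum_tp / num_ground_truth
    precision = cum_tp / (cum_tp + cum_fp)

    # Pad the curve so it starts at recall 0 and ends at recall 1.
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([0.0], precision, [0.0]))
    # Interpolate: precision at recall r is the best precision at any recall >= r.
    p = np.maximum.accumulate(p[::-1])[::-1]
    # Sum rectangle areas wherever recall increases.
    steps = np.nonzero(r[1:] != r[:-1])[0]
    return float(np.sum((r[steps + 1] - r[steps]) * p[steps + 1]))


print(round(average_precision([0.9, 0.8, 0.7], [1, 0, 1], num_ground_truth=2), 3))  # 0.833
```

In the usage line, three detections (the second one wrong) against two ground truth boxes give an AP of 5/6: full precision up to recall 0.5, then precision 2/3 up to recall 1.0.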

Each model, native and HD, was trained until its loss converged toward 0, indicating that additional iterations would no longer meaningfully improve its fit for solar panel detection. For both models, this convergence occurred at approximately 100,000 iterations in every repeated test.

The models were then evaluated on the test images, a subset of each dataset withheld from training. Each model was trained and tested repeatedly to establish a range of performance metrics. The only differences between repeated tests on the same dataset were the randomized image augmentations applied during training (such as random changes in color saturation, exposure and hue), which help the model generalize across varied scenarios.
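Randomized color augmentation of this kind can be sketched as hue, saturation and exposure jitter in HSV space. This is a minimal sketch loosely modeled on the `saturation`, `exposure` and `hue` settings found in darknet-style YOLO configuration files; the sampling ranges and the helpers `jitter_color` and `random_scale` are assumptions for illustration, not the settings used in this study. It converts pixels with the standard library's `colorsys`, which is fine for small tiles but slow for full scenes.

```python
import colorsys

import numpy as np

# Element-wise wrappers around the stdlib per-pixel converters (slow, but simple).
_rgb_to_hsv = np.vectorize(colorsys.rgb_to_hsv)
_hsv_to_rgb = np.vectorize(colorsys.hsv_to_rgb)


def random_scale(rng: np.random.Generator, max_scale: float) -> float:
    """Draw a factor in [1, max_scale] and invert it half the time."""
    scale = rng.uniform(1.0, max_scale)
    return scale if rng.random() < 0.5 else 1.0 / scale


def jitter_color(image: np.ndarray, rng: np.random.Generator,
                 saturation: float = 1.5, exposure: float = 1.5,
                 hue: float = 0.1) -> np.ndarray:
    """Randomly perturb hue, saturation and exposure of an RGB image.

    image: float array of shape (H, W, 3) with values in [0, 1].
    """
    h, s, v = _rgb_to_hsv(image[..., 0], image[..., 1], image[..., 2])
    h = (h + rng.uniform(-hue, hue)) % 1.0  # rotate hue, wrapping around the color wheel
    s = np.clip(s * random_scale(rng, saturation), 0.0, 1.0)
    v = np.clip(v * random_scale(rng, exposure), 0.0, 1.0)  # exposure scales brightness
    r, g, b = _hsv_to_rgb(h, s, v)
    return np.stack([r, g, b], axis=-1)


# Hypothetical 4 x 5 pixel tile; each call draws a new random augmentation.
tile = np.random.default_rng(0).random((4, 5, 3))
augmented = jitter_color(tile, np.random.default_rng(42))
```

Sampling a fresh perturbation on every training pass is what makes repeated runs differ, as described above.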

Analysis

Visually, the HD model outperformed the native-resolution model, detecting more solar panels and producing fewer false positives across all repeated tests. The HD model was also able to identify clusters of panels and panels of various sizes, both of which challenged the native model. These results show that, when detecting features like solar panels, models trained and tested on HD imagery perform better. Some visual examples are below.

Results show that the native-resolution model (right) detects some, but not all, of the solar panels in the image, while the HD model (center) detects all of them.

This cluster of solar panels highlights the benefit of using HD imagery: the HD model (center) identifies all of the solar panels missed by the native-resolution model (right). Although it performs better than the native model, the HD model did produce one false positive detection at the top of the image.

To quantify the improvement achieved with HD imagery, the ground truth bounding boxes in the test images were used to calculate the F1 score, a metric that measures a model’s ability to correctly identify features while avoiding both false positives and false negatives. The F1 score is the harmonic mean of precision (the fraction of detections that are correct) and recall (the fraction of ground truth objects that are detected). It ranges from 0 to 1, with 1 being ideal: all objects are correctly detected and there are no false positives.
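Given counts of true positives (TP), false positives (FP) and false negatives (FN), precision, recall and F1 follow directly. A minimal sketch; `precision_recall_f1` is a hypothetical helper, and the counts in the usage line are made up for illustration, not results from this study.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) from detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of detections that are correct
    recall = tp / (tp + fn) if tp + fn else 0.0  # share of ground truth objects found
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical counts, not results from the study:
p, r, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
print(f"{p:.2f} {r:.2f} {f1:.3f}")  # 0.90 0.75 0.818
```

Because it is a harmonic mean, F1 stays low unless precision and recall are both high, so a model cannot raise it simply by emitting many detections.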

For this study, a detection was counted as correct when the intersection over union (IoU) between the ground truth bounding box and the predicted bounding box, that is, the area of their overlap divided by the area of their union, was at least 50%.
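The 50% IoU rule can be sketched as a greedy matcher: predictions are visited in descending order of confidence, and each one claims the unmatched ground truth box it overlaps most, provided the IoU is at least 0.5. Unmatched predictions count as false positives and unmatched ground truth boxes as false negatives. The greedy strategy and the helper names `iou` and `match_detections` are assumptions; the study does not state how multiple overlapping boxes were resolved.

```python
from typing import Sequence, Set, Tuple

# (x_min, y_min, x_max, y_max)
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(preds: Sequence[Box], scores: Sequence[float],
                     truths: Sequence[Box], threshold: float = 0.5) -> Tuple[int, int, int]:
    """Greedily match predictions to ground truth; return (tp, fp, fn)."""
    order = sorted(range(len(preds)), key=lambda i: scores[i], reverse=True)
    matched: Set[int] = set()
    for i in order:
        best_j, best_overlap = -1, 0.0
        for j, truth in enumerate(truths):
            if j in matched:
                continue  # each ground truth box can be matched only once
            overlap = iou(preds[i], truth)
            if overlap >= threshold and overlap > best_overlap:
                best_j, best_overlap = j, overlap
        if best_j >= 0:
            matched.add(best_j)
    tp = len(matched)
    return tp, len(preds) - tp, len(truths) - tp


truths = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0, 0, 10, 9), (50, 50, 60, 60)]
print(match_detections(preds, [0.9, 0.8], truths))  # (1, 1, 1)
```

The resulting counts are exactly the inputs that precision, recall and F1 are computed from.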

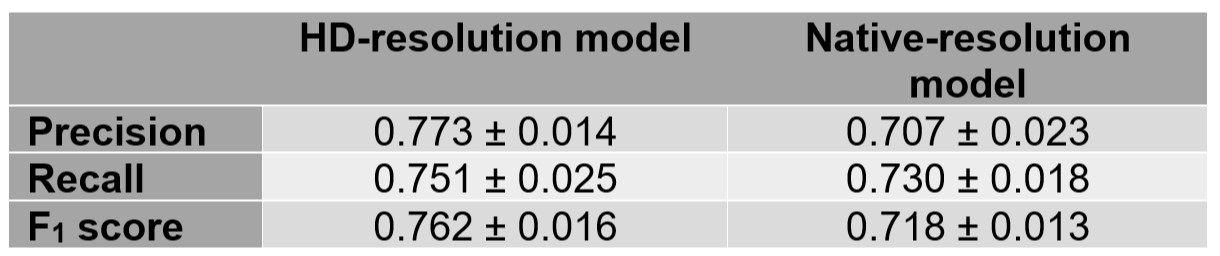

Table 1 shows that the HD model outperforms the native model across repeated tests. For this use case, simply changing the dataset resolution from native to HD improved the F1 score by more than 6%.

Compared to the native-resolution model, the HD-resolution model achieves better results for all three testing metrics: precision, recall and F1 score.

Conclusions

This exploratory study into quantifiable metrics for HD indicates clear benefits of using Maxar’s HD imagery for ML applications: HD imagery provides better results, both qualitatively and quantitatively. Meaningfully improving model performance simply by switching to HD imagery can save substantial time and effort compared with improving performance incrementally through tedious, iterative fine-tuning. Building these powerful models more quickly will also expedite our ability to detect objects at a finer scale and gather valuable information.

Notably, in the 25 sq km test area, the HD model correctly identified approximately 30 more solar panels and excluded 50 more false positives than the native model. By extension, if the same per-area differences held across all of Germany (about 357,600 sq km), a model trained on HD-resolution imagery would correctly identify roughly 430,000 more solar panels and avoid roughly 715,000 more false positives than a model trained on native-resolution imagery: more than 1 million additional correct outcomes in total. Detecting considerably more solar panels with a trained ML model translates to saving several thousand working hours spent correctly identifying these objects, both during data processing and in the field.
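The extrapolation to Germany is a linear scaling of the per-area differences observed in the 25 sq km test area. A minimal sketch, assuming (neither figure comes from the study) that Germany covers roughly 357,600 sq km and that solar panel density is uniform; `scale_to_area` is a hypothetical helper.

```python
# Assumptions (not from the study): Germany covers roughly 357,600 sq km,
# and solar panel density is uniform, so differences scale linearly with area.
GERMANY_AREA_KM2 = 357_600
TEST_AREA_KM2 = 25


def scale_to_area(count_in_test_area: float, target_area_km2: float = GERMANY_AREA_KM2) -> float:
    """Linearly scale a count observed in the test area to a larger area."""
    return count_in_test_area * target_area_km2 / TEST_AREA_KM2


extra_correct = scale_to_area(30)  # additional correctly identified panels
avoided_false = scale_to_area(50)  # additional false positives avoided
print(extra_correct, avoided_false, extra_correct + avoided_false)  # 429120.0 715200.0 1144320.0
```

Together the two terms come to roughly 1.14 million; the additional correct detections alone account for about 430,000 of that. Real panel density varies strongly by region, so these figures indicate an order of magnitude rather than a forecast.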

While we continue exploring our own capabilities with HD, we also expect HD to deliver meaningful data and better performance for our customers. We invite you to test your models using Maxar’s HD imagery and are interested in hearing from you about your results.

Test your models with a sample

Test your models on our native and HD imagery. We look forward to seeing your results.

[1] United Nations. The Sustainable Development Goals Report; Jensen, L., Ed.; United Nations Publication Issued by the Department of Economic and Social Affairs; United Nations: New York, NY, USA, 2021; https://unstats.un.org/sdgs/report/2021/; ISBN 978-92-1-101439-6.

[2] Van Etten, A. You Only Look Twice: Rapid Multi-Scale Object Detection in Satellite Imagery; arXiv:1805.09512, 2018.