For a while now, the DigitalGlobe GBDX team has been running machine learning-based object detection at continental scale. Each time we add a new model to GBDX, we kick the tires and compare it against existing capabilities to find its advantages and disadvantages. We keep our customer use cases in mind, which typically boil down to “monitoring and change” or “pattern of life” activities. With the models we have today, for example, we monitor parking lots and ports for changes or activity.

With that in mind, we wanted to take stock of the current state of machine learning on satellite imagery: a “state of the union,” or rather a “state of the map.” Today, if we select an area of interest (AOI) and want to process every new image of it for a given detection, what would that look like? In the following examples, we look at some of our current capabilities, what they offer, and what we’ll be able to provide in the future when we establish intra-day satellite collection revisits.

Counting Cars

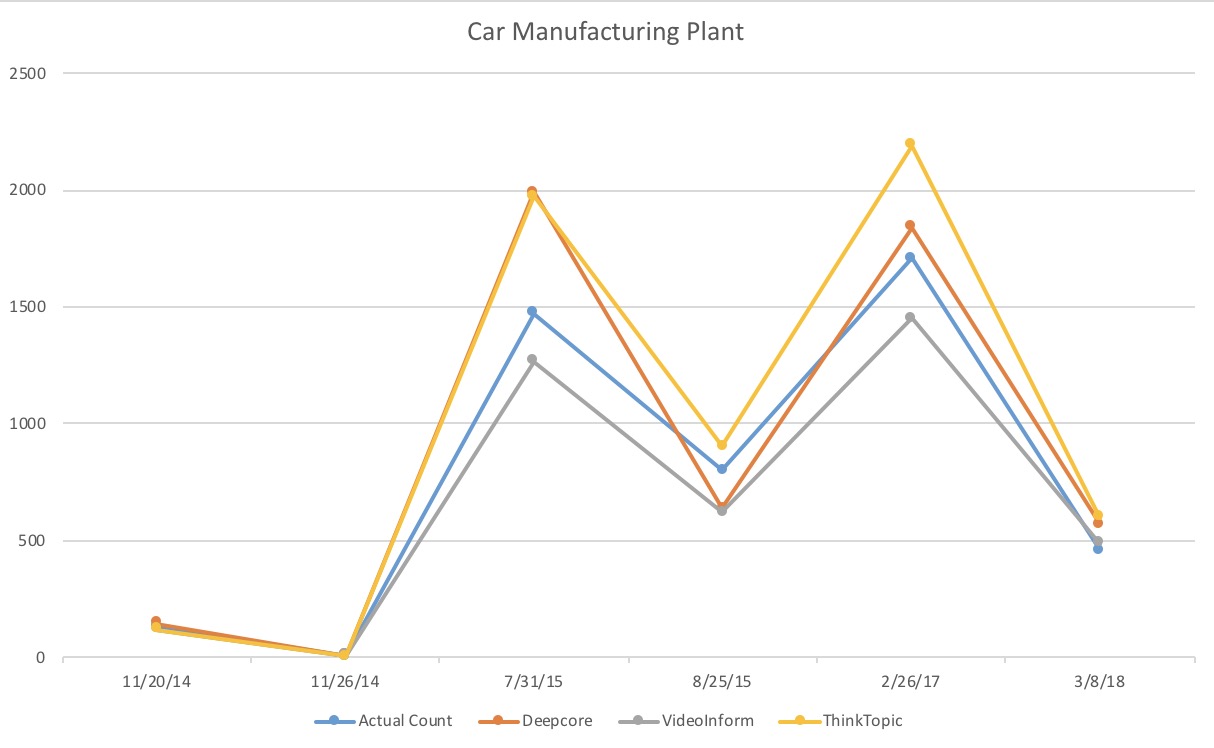

First, we looked at parking lot inventories. We chose a car manufacturing plant in Montgomery, Alabama, whose lot has clear white outlines marking where the cars are parked. The outlines make it easier to count the cars manually, row by row, to check the model results.

For counting vehicles, we have several providers available: DeepCore Machine Learning, created by our colleagues at Radiant Solutions (our sibling Maxar Technologies company); Video Inform; and ThinkTopic. The image below shows the output of the DeepCore model.

Each model performed fairly well and followed the trend of the ground truth counts. As you can see from this very limited sample, though, no model is consistently better or worse than the others, so the best results come from running more than one model. Picking a single model to run is hard: you have to weigh the off-nadir angle and sun angle of the image you’re about to process against the conditions each model was trained on.
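To make the comparison concrete, here is a minimal Python sketch that scores each model’s per-image count against a manual ground-truth count, reports which model came closest on each image, and combines the models by taking the per-image median. The model names and all counts are made-up placeholders, not the results from this study, and the median is only one simple way to combine models, not necessarily the method we used.

```python
from statistics import mean, median


def mean_absolute_error(predicted, truth):
    """Average absolute difference between predicted and ground-truth counts."""
    if len(predicted) != len(truth):
        raise ValueError("predicted and truth must have the same length")
    return mean(abs(p - t) for p, t in zip(predicted, truth))


def best_model_per_image(counts_by_model, truth):
    """For each image, name the model whose count is closest to ground truth."""
    return [
        min(counts_by_model, key=lambda name: abs(counts_by_model[name][i] - t))
        for i, t in enumerate(truth)
    ]


def median_ensemble(counts_by_model):
    """Combine models by taking the median count for each image."""
    return [median(counts) for counts in zip(*counts_by_model.values())]


# Hypothetical manual counts for three images of the same lot (placeholders).
ground_truth = [412, 388, 455]
# Hypothetical per-image counts from three unnamed models (placeholders).
counts_by_model = {
    "model_a": [398, 401, 430],
    "model_b": [420, 370, 461],
    "model_c": [405, 365, 440],
}

for name, counts in counts_by_model.items():
    print(f"{name}: MAE = {mean_absolute_error(counts, ground_truth):.1f}")
print("closest model per image:", best_model_per_image(counts_by_model, ground_truth))
ensemble = median_ensemble(counts_by_model)
print("median ensemble:", ensemble, f"MAE = {mean_absolute_error(ensemble, ground_truth):.1f}")
```

With these placeholder numbers, a different model is closest on each image (`['model_c', 'model_a', 'model_b']`). The median of three counts can never be further from ground truth than the worst model on any image, but here it still does not beat `model_b` overall, which is why combining models is a hedge rather than a guarantee.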

Tracking Port Activity

To understand shipping activity, we set out to count the ships in port over time. We wanted a busy, geographically relevant location with large cargo ships and tankers, so we selected the Bandar Abbas port on the coast of Iran and ran the CrowdAI model over it.

The outputs of the CrowdAI model are better than the graph suggests. Our ground truth counted all vessels, but the model was only trained on ships larger than 5 m, so we needed to take a closer look at our ground-truth counts to get an accurate view of how well the model performed.
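The size mismatch above can be handled by filtering the ground truth down to what the model was trained to find before comparing counts. The sketch below assumes each manually counted vessel has an estimated length from the image; the `Vessel` class, `comparable_ground_truth`, `count_ratio`, and all data values are hypothetical illustrations, not part of the CrowdAI model or GBDX.

```python
from dataclasses import dataclass


@dataclass
class Vessel:
    """One manually counted vessel and its length as estimated on the image."""
    vessel_id: str
    length_m: float


# The detector was only trained on ships larger than this length.
MIN_TRAINED_LENGTH_M = 5.0


def comparable_ground_truth(vessels, min_length_m=MIN_TRAINED_LENGTH_M):
    """Drop vessels the detector was never trained to find."""
    return [v for v in vessels if v.length_m > min_length_m]


def count_ratio(model_count, reference_count):
    """Model count as a fraction of the reference count (1.0 = counts agree)."""
    return model_count / reference_count if reference_count else float("nan")


# Hypothetical ground truth for one image: two small boats and four larger ships.
vessels = [
    Vessel("v1", 3.5), Vessel("v2", 4.0), Vessel("v3", 6.5),
    Vessel("v4", 12.0), Vessel("v5", 85.0), Vessel("v6", 210.0),
]
model_count = 4  # hypothetical detector output for the same image

print("vs. all vessels:", round(count_ratio(model_count, len(vessels)), 2))
print("vs. vessels > 5 m:", count_ratio(model_count, len(comparable_ground_truth(vessels))))
```

Against all six vessels the model appears to find about two thirds of them (0.67); against the four it was trained on, the counts agree (1.0). Matching totals do not prove each detection is correct, so a per-detection check is still worthwhile.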

Detecting Multiple Objects

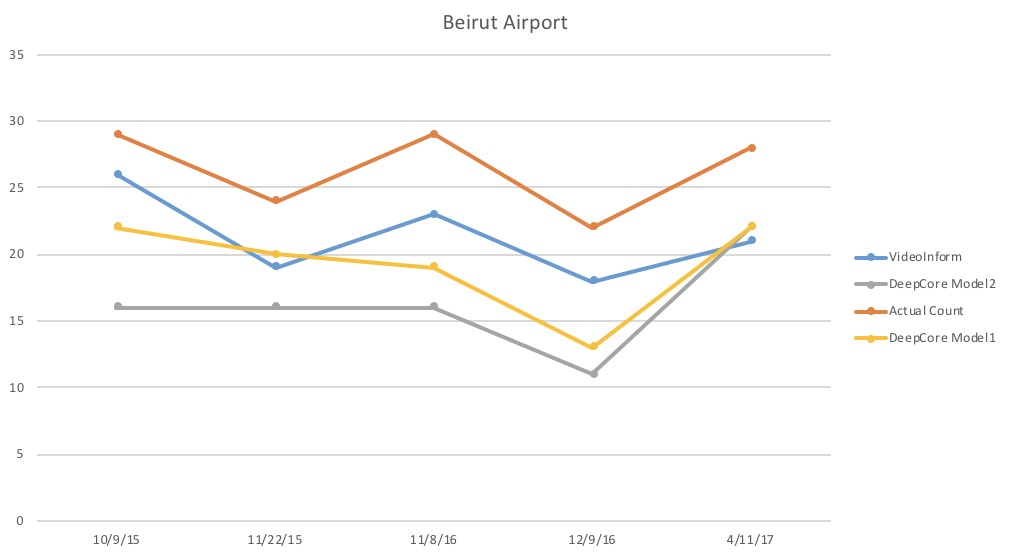

Next, we wanted a location with several types of objects to detect. We selected Beirut, focusing on the Beirut-Rafic Hariri International Airport and the port.

The image below is the output of the Video Inform model.

Our colleagues on the DeepCore team at Radiant Solutions have been amassing a growing number of machine learning models for object detection, and those models keep getting better over time. For this example, we ran two of them to see which performed better.

The image below highlights the results from DeepCore model 1.
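Deciding which of two models is “better” takes more than totals: one common approach is to match each detection to a hand-labeled box by intersection over union (IoU) and compute precision and recall. The sketch below is a generic illustration of that technique, not how DeepCore is evaluated. The box format, the 0.5 IoU threshold (a common convention), and all boxes are assumptions, and the greedy matching skips the confidence-score sorting that standard benchmarks use.

```python
from typing import List, Tuple

# Axis-aligned box in pixel coordinates: (xmin, ymin, xmax, ymax).
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(detections: List[Box], truth: List[Box], threshold: float = 0.5):
    """Greedy one-to-one matching: each detection claims the unmatched
    ground-truth box it overlaps most, if that IoU is at least `threshold`."""
    matched = set()
    true_positives = 0
    for det in detections:
        best_j, best_score = None, threshold
        for j, gt in enumerate(truth):
            if j in matched:
                continue
            score = iou(det, gt)
            if score >= best_score:
                best_j, best_score = j, score
        if best_j is not None:
            matched.add(best_j)
            true_positives += 1
    precision = true_positives / len(detections) if detections else 0.0
    recall = true_positives / len(truth) if truth else 0.0
    return precision, recall


# Hypothetical hand-labeled boxes and outputs from two models (placeholders).
truth = [(0, 0, 10, 10), (20, 20, 30, 30), (40, 40, 50, 50)]
model_1 = [(1, 1, 11, 11), (21, 19, 31, 29), (60, 60, 70, 70)]
model_2 = [(0, 0, 10, 10), (40, 41, 50, 51)]

for name, dets in [("model_1", model_1), ("model_2", model_2)]:
    p, r = precision_recall(dets, truth)
    print(f"{name}: precision={p:.2f} recall={r:.2f}")
```

Here both placeholder models find two of the three labeled objects (recall 0.67), but `model_1` also reports a spurious box, so `model_2` has higher precision (1.00 vs. 0.67).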

We ran the CrowdAI ship detector focused on the port.

Again, for this exercise we counted all vessels, but the model was only trained on ships larger than 5 m, so we needed to take a closer look to get an accurate assessment of how well the model performed.
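Running a ship detector “focused on the port” means only detections inside the drawn AOI should count. The sketch below shows the classic ray-casting point-in-polygon test applied to detection box centers; it is an illustration of the idea, not AnswerFactory’s implementation. It assumes planar coordinates (for real longitude/latitude work, a geometry library such as Shapely is the usual choice), and the AOI, boxes, and function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


def point_in_polygon(point: Point, polygon: Sequence[Point]) -> bool:
    """Ray casting: count how many polygon edges a horizontal ray from the
    point crosses to the right; an odd count means the point is inside.
    Points exactly on an edge may be classified either way."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the ray's y-coordinate can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def detections_in_aoi(boxes: List[Box], aoi: Sequence[Point]) -> List[Box]:
    """Keep detections whose box center falls inside the AOI polygon."""
    kept = []
    for xmin, ymin, xmax, ymax in boxes:
        center = ((xmin + xmax) / 2, (ymin + ymax) / 2)
        if point_in_polygon(center, aoi):
            kept.append((xmin, ymin, xmax, ymax))
    return kept


# Hypothetical L-shaped AOI drawn around a harbor, in planar coordinates.
harbor_aoi = [(0, 0), (100, 0), (100, 50), (50, 50), (50, 100), (0, 100)]
# Hypothetical ship detections; the second lies in the notch outside the AOI.
ship_boxes = [(20, 60, 30, 90), (70, 70, 80, 80), (80, 10, 95, 20)]

print(detections_in_aoi(ship_boxes, harbor_aoi))
```

The L-shaped AOI shows why a simple bounding-box check is not enough: the second detection sits inside the AOI’s bounding rectangle but outside the polygon itself, so only the first and third boxes are kept.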

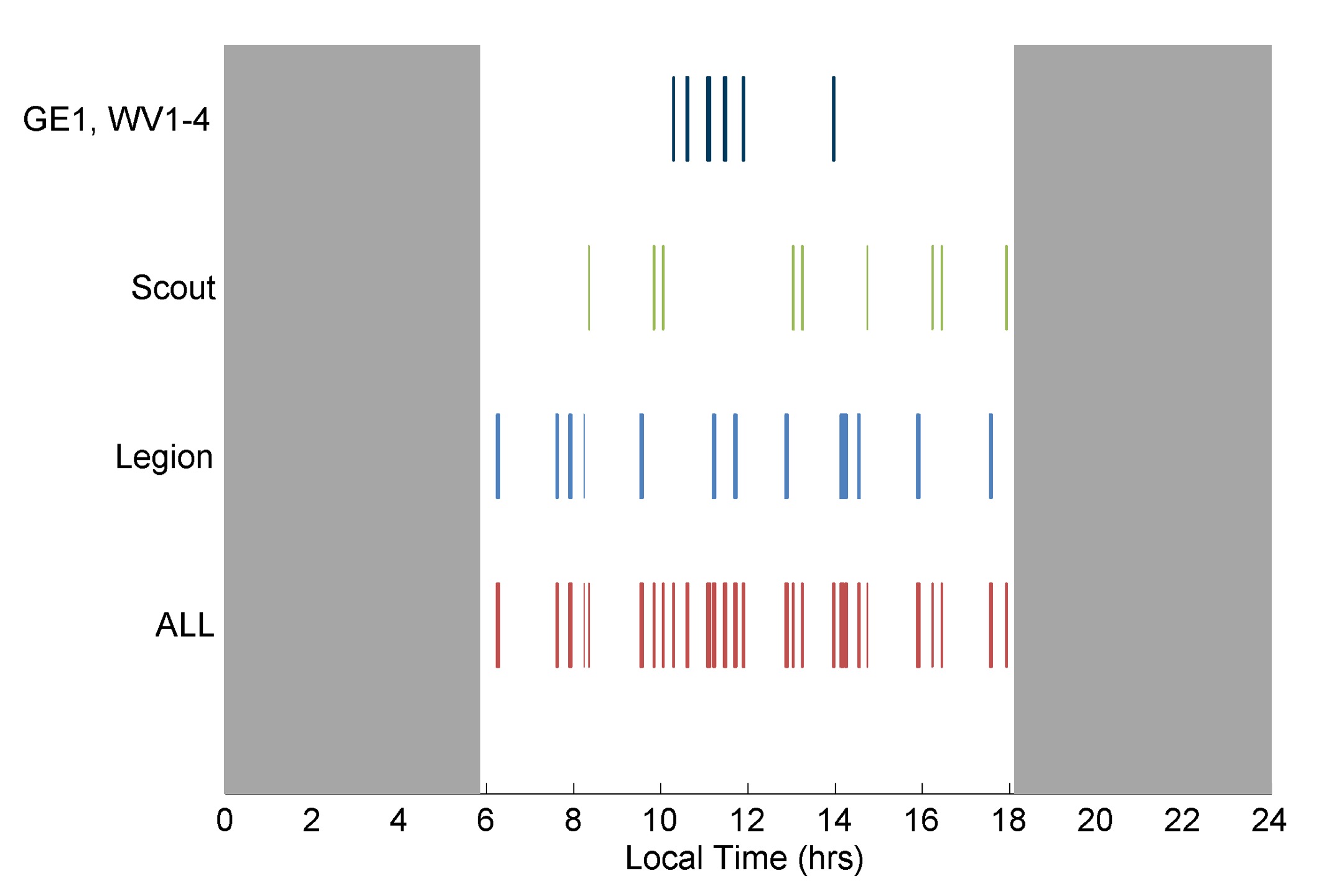

How did we accomplish all of this? We used AnswerFactory, a powerful tool developed by the GBDX team that lets users select an area of interest and run object detection or feature extraction on satellite images.

Although AnswerFactory is fully automated, we manually selected the images to feed into the machine learning models. To find them, we searched discover.digitalglobe.com for images of our AOI with the lowest possible cloud cover and entered their image IDs into AnswerFactory. We then set up the projects, drawing AOIs and selecting which models to run for each one. The projects processed in the background in a matter of hours, and we received an email notification when they were complete. We reviewed the results with the tagging feature, marking each detection “thumbs-up” or “thumbs-down” so we could easily tell the good detections from the bad. This lets us filter the results for fast counting.

What do we need before this approach can solve real-time global monitoring problems? For starters, the models could be improved. In Trajectory Magazine’s article “The Algorithm Age,” NGA Program Manager Brian Bates says the algorithms NGA acquired from GBDX have an accuracy rate of approximately 70 percent. That is “pretty good,” he says, “but for government work we need it to be a lot more authoritative than that.”

How can we make them better? It starts with a plan for scaling out validation and verification, as our colleagues at Radiant Solutions have written about. Then we grow our DigitalGlobe constellation to provide intra-day revisits of the places of interest. The chart below shows our current capacity for revisiting a location and builds up to our future plans for true monitoring capabilities throughout the day.
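Returning to the AnswerFactory workflow described above, its two manual steps, picking the clearest images and tallying the thumbs-up/thumbs-down tags, can be sketched in a few lines of Python. This is not the Discover or AnswerFactory API: `CatalogImage`, its fields, the 10 percent cloud threshold, and the tag values are all hypothetical stand-ins.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class CatalogImage:
    """Minimal stand-in for a catalog search result (hypothetical fields)."""
    image_id: str
    cloud_cover: float  # fraction of the scene under cloud, 0.0 to 1.0


def lowest_cloud_images(images: List[CatalogImage], max_cloud: float = 0.1,
                        limit: int = 5) -> List[str]:
    """IDs of the clearest images, at most `limit`, none above `max_cloud`."""
    clear = sorted((img for img in images if img.cloud_cover <= max_cloud),
                   key=lambda img: img.cloud_cover)
    return [img.image_id for img in clear[:limit]]


def summarize_tags(tags: Dict[str, str]) -> Dict[str, Optional[float]]:
    """Tally thumbs-up/thumbs-down review tags for one model's detections.

    `count` is the number of confirmed detections; `precision` is the share of
    reviewed detections that were confirmed. Missed objects are not captured.
    """
    up = sum(1 for tag in tags.values() if tag == "up")
    down = sum(1 for tag in tags.values() if tag == "down")
    reviewed = up + down
    return {
        "count": up,
        "rejected": down,
        "precision": up / reviewed if reviewed else None,
    }


# Hypothetical search results and review tags (placeholders).
search_results = [
    CatalogImage("img-A", 0.30), CatalogImage("img-B", 0.02),
    CatalogImage("img-C", 0.08), CatalogImage("img-D", 0.00),
]
review = {"det-1": "up", "det-2": "up", "det-3": "down", "det-4": "up"}

print(lowest_cloud_images(search_results))
print(summarize_tags(review))
```

The tag-based precision only reflects false positives among reviewed detections, so it is not necessarily comparable to the accuracy figure quoted above, which may also account for missed objects.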

Dr. Walter Scott, DigitalGlobe Founder and Maxar CTO, has said that when our next-generation WorldView Legion and Scout small satellite constellations are in service, we’ll be able to revisit the most rapidly changing areas on Earth as frequently as every 20 to 30 minutes, from sunup to sundown. This will enable entirely new use cases and near real-time change detection.

Having the frameworks and systems in place to handle all of this data automatically will be critical to delivering insights in a timely manner, so that the sheer number of images doesn’t become an overwhelming review problem.
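A quick back-of-the-envelope calculation shows why automation matters at a 20 to 30 minute revisit rate. The sketch below assumes 12 hours of daylight (real daylight varies with latitude and season), a first collection exactly at sunup, and a hypothetical watch list of 100 AOIs; the function names are illustrative only.

```python
import math


def collections_per_day(daylight_hours: float, revisit_minutes: float) -> int:
    """Collections between sunup and sundown at a fixed revisit interval,
    assuming the first collection happens exactly at sunup."""
    daylight_minutes = daylight_hours * 60
    return math.floor(daylight_minutes / revisit_minutes) + 1


def images_to_review_per_day(num_aois: int, daylight_hours: float,
                             revisit_minutes: float) -> int:
    """Daily image volume if every AOI is collected at the same cadence."""
    return num_aois * collections_per_day(daylight_hours, revisit_minutes)


# Assumes 12 hours of daylight; real daylight varies with latitude and season.
for interval in (20, 30):
    print(f"every {interval} min: {collections_per_day(12, interval)} collections per AOI per day")
# Hypothetical watch list of 100 AOIs.
print("images per day for 100 AOIs:", images_to_review_per_day(100, 12, 20))
```

Under these assumptions a single AOI yields 25 to 37 collections a day, and 100 AOIs at the 20-minute cadence yield 3,700 images a day, far more than anyone could review by hand.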