Earthcube develops artificial intelligence (AI) solutions based on an automated analysis of geospatial intelligence (GEOINT) workflows. By combining state-of-the-art techniques in both machine learning (ML) and computer vision, Earthcube aggregates information from multiple sources, such as satellite imagery and open source data feeds, to provide its customers with strategic knowledge regarding critical sites of interest, enabling faster interventions for a safer environment. Earthcube helps customers provide security to people and infrastructure and follow the evolution of events happening on Earth.

To achieve its imagery intelligence mission, Earthcube relies on commercial, high-resolution satellite imagery providers. Originally, acquiring large satellite images was quite painful because we manually pulled the files via FTP sites. In addition, it was too expensive to buy enough imagery for ML purposes. But thanks to Maxar's SecureWatch platform and associated API, we can now automate and rationalize the process to enable state-of-the-art AI developments.

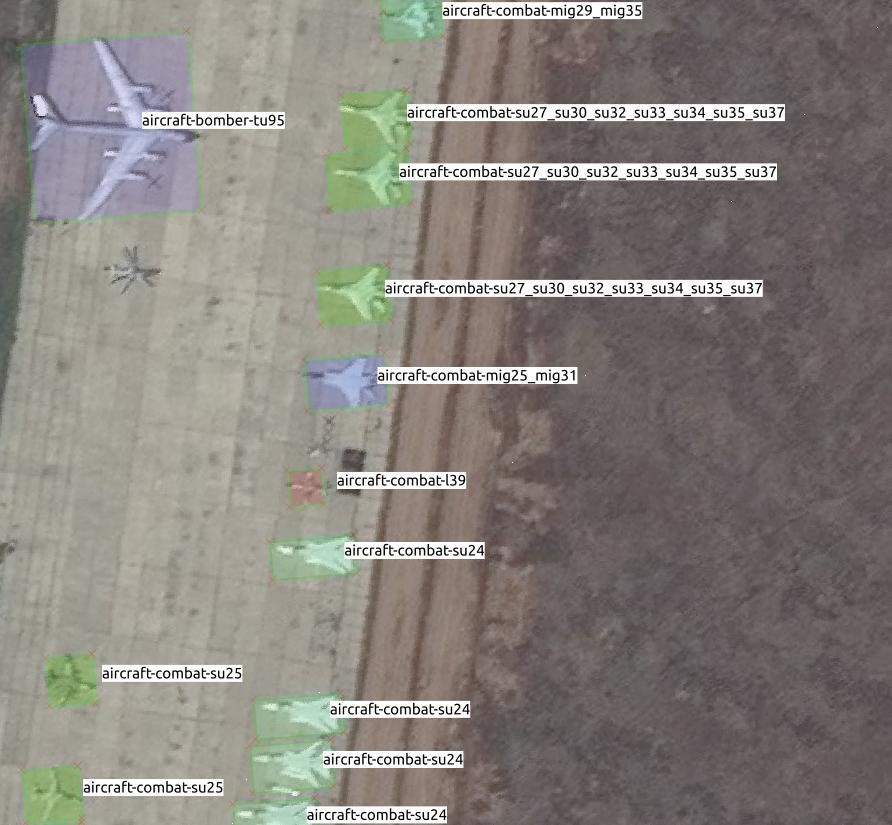

We selected SecureWatch because it gives us on-demand access to Maxar’s entire 110-petabyte library of high-resolution imagery. Using the API and browser interface, we can stream and download image data and metadata covering exactly the areas we need, directly into our analytical environment. Because SecureWatch gives us access to the highest-resolution commercial satellite imagery (as fine as 30 cm ground sample distance), we can train our algorithms to perform the most demanding tasks, such as identifying aircraft by type.

Here’s how, at Earthcube, we integrate SecureWatch into our workflows!

SecureWatch API

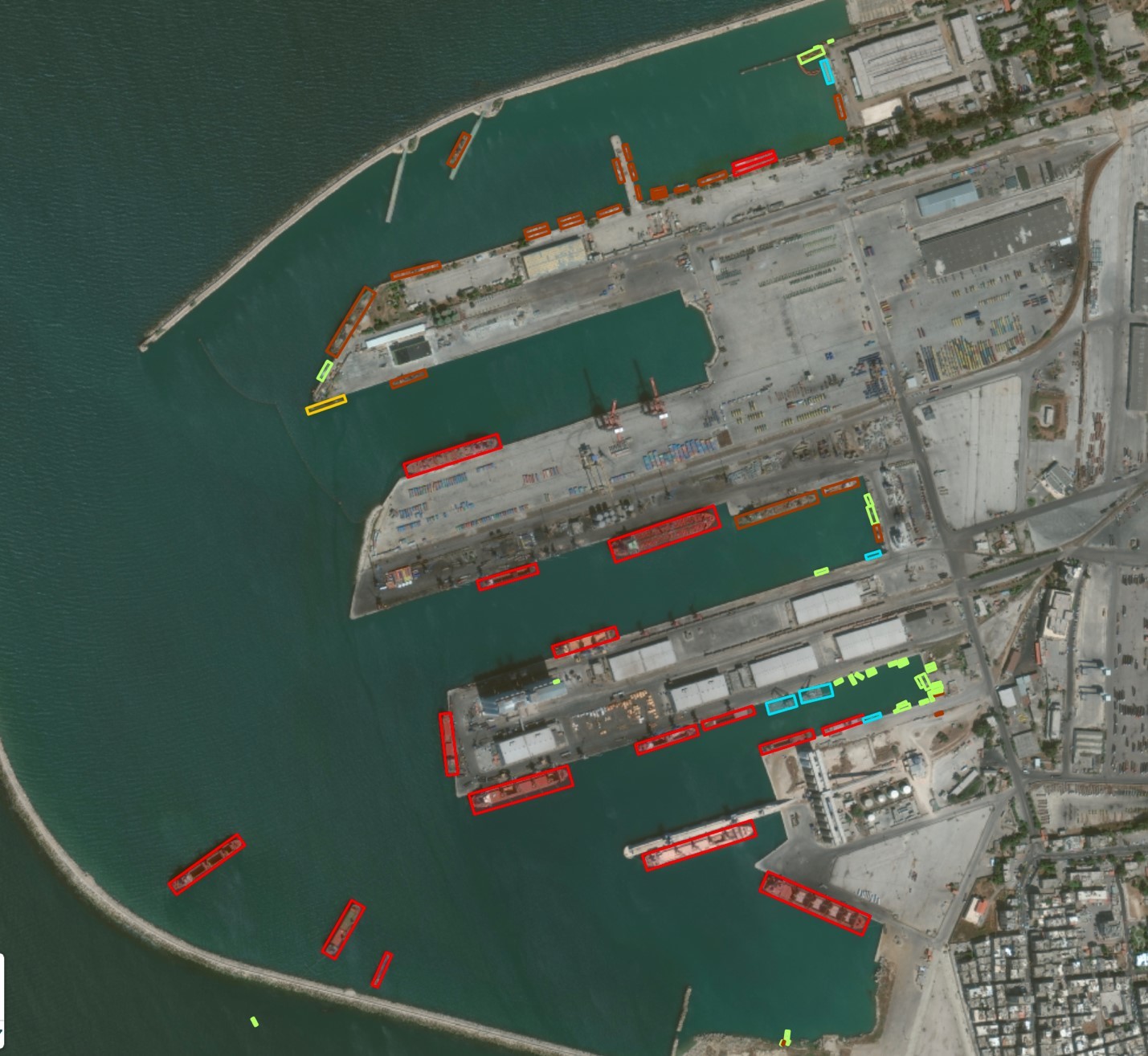

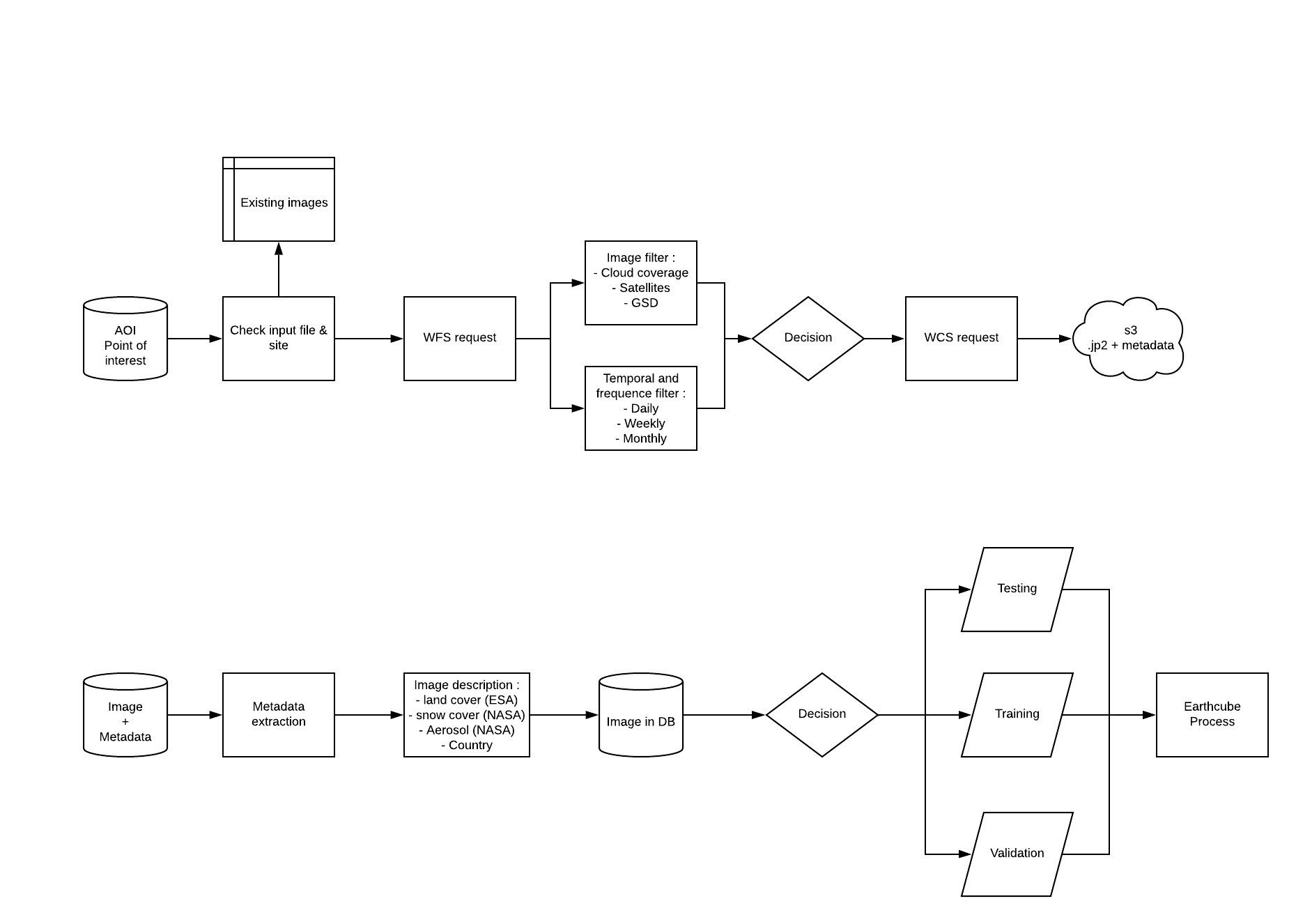

We use satellite imagery to train our object detection algorithms to look for specific items like planes, boats and vehicles. We bring this valuable data into our analytics environment through Maxar’s SecureWatch API. With a simple API call, we send a Web Feature Service (WFS) request with the coordinates of the desired location and obtain a list of the Maxar images available over that site, together with the metadata for each image. A simple check against this list then lets us automatically identify any images already in our database and remove them from the list to avoid duplicates.
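A minimal Python sketch of this query-and-deduplicate step might look like the following. It builds a standard OGC WFS 1.0.0 GetFeature URL for a bounding box and then drops images whose identifiers are already known. The endpoint, layer name (`typeName`), the `featureId` property and the GeoJSON output format are hypothetical placeholders, not documented SecureWatch values; authentication and the HTTP call itself are left out.

```python
from urllib.parse import urlencode

# Hypothetical placeholders: the real SecureWatch WFS endpoint, layer name and
# identifier property must be taken from Maxar's documentation.
WFS_ENDPOINT = "https://example.invalid/wfs"
TYPE_NAME = "hypothetical:ImageFootprints"
ID_PROPERTY = "featureId"


def build_wfs_query(bbox, max_features=1000):
    """Build an OGC WFS 1.0.0 GetFeature URL for a (min_lon, min_lat, max_lon, max_lat) box."""
    params = {
        "service": "WFS",
        "version": "1.0.0",
        "request": "GetFeature",
        "typeName": TYPE_NAME,
        "maxFeatures": max_features,
        # WFS 1.0.0 conventionally reads EPSG:4326 coordinates as lon,lat.
        "bbox": ",".join(str(v) for v in bbox),
        # Assumes the server can return GeoJSON; supported formats are server-specific.
        "outputFormat": "application/json",
    }
    return f"{WFS_ENDPOINT}?{urlencode(params)}"


def new_features(features, known_ids):
    """Keep only features whose ID is neither in our database nor repeated in the response."""
    seen = set(known_ids)
    fresh = []
    for feature in features:
        image_id = feature["properties"][ID_PROPERTY]
        if image_id not in seen:
            seen.add(image_id)
            fresh.append(feature)
    return fresh
```

Here `known_ids` stands for whatever set of image identifiers the local database already holds; using a set keeps each membership check constant-time even for large archives.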

Next, we apply a first round of filters on cloud cover, spatial resolution and the angle of the satellite when it collected the image. Only the images that meet these criteria, and therefore match our use case, remain on the list. We then shorten the list further by choosing how frequently we need images of a site to train the algorithm: daily, weekly, monthly, bimonthly and so on. This choice is left to our Geospatial Information System team.
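To make the filtering step concrete, here is a minimal Python sketch. The field names, the threshold values and the rule of keeping the least cloudy image in each period are illustrative assumptions, not actual SecureWatch metadata fields or Earthcube settings, and only daily, weekly and monthly periods are handled.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Candidate:
    image_id: str
    acquired: datetime
    cloud_cover: float    # fraction of the image covered by clouds, 0..1
    gsd_m: float          # ground sample distance in metres
    off_nadir_deg: float  # satellite collection angle in degrees


def apply_filters(cands, max_cloud=0.2, max_gsd_m=0.5, max_off_nadir_deg=25.0):
    """First-round filters: keep only images within all thresholds (illustrative values)."""
    return [
        c for c in cands
        if c.cloud_cover <= max_cloud
        and c.gsd_m <= max_gsd_m
        and c.off_nadir_deg <= max_off_nadir_deg
    ]


def period_key(ts, frequency):
    """Map a timestamp to the period it belongs to."""
    if frequency == "daily":
        return ts.date()
    if frequency == "weekly":
        iso = ts.isocalendar()
        return (iso[0], iso[1])  # (ISO year, ISO week number)
    if frequency == "monthly":
        return (ts.year, ts.month)
    raise ValueError(f"unsupported frequency: {frequency}")


def thin_by_frequency(cands, frequency="weekly"):
    """Keep one image per period: the least cloudy one (an assumed tie-break rule)."""
    best = {}
    for c in cands:
        k = period_key(c.acquired, frequency)
        if k not in best or c.cloud_cover < best[k].cloud_cover:
            best[k] = c
    return sorted(best.values(), key=lambda c: c.acquired)
```

Filtering before thinning matters: if the order were reversed, a period's only surviving image could be one that the quality filters later reject.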

Once we have narrowed down our image choices, we send a Web Coverage Service (WCS) request to download the selected images, which are then stored in Earthcube’s proprietary database.
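For illustration, a WCS download request can be expressed as a standard OGC WCS 1.0.0 GetCoverage URL restricted to the AOI bounding box. This is a minimal sketch: the endpoint, the coverage identifier and the `GeoTIFF` format name are hypothetical placeholders (format names are server-specific), and authentication is omitted.

```python
from urllib.parse import urlencode

# Hypothetical placeholder endpoint; the real SecureWatch WCS URL is not given here.
WCS_ENDPOINT = "https://example.invalid/wcs"


def build_getcoverage_url(coverage_id, bbox, width, height, fmt="GeoTIFF"):
    """Build an OGC WCS 1.0.0 GetCoverage URL that requests only the AOI bounding box.

    bbox is (min_lon, min_lat, max_lon, max_lat) in EPSG:4326; width and height
    are the output size in pixels.
    """
    params = {
        "SERVICE": "WCS",
        "VERSION": "1.0.0",
        "REQUEST": "GetCoverage",
        "COVERAGE": coverage_id,
        "CRS": "EPSG:4326",
        "BBOX": ",".join(str(v) for v in bbox),
        "WIDTH": width,
        "HEIGHT": height,
        "FORMAT": fmt,
    }
    return f"{WCS_ENDPOINT}?{urlencode(params)}"
```

The resulting URL would then be fetched with an authenticated HTTP GET (for example via `urllib.request`) and the returned raster written to storage.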

Metadata extraction

The images over our specific areas of interest (AOIs) are important, but so is their metadata, which is essential for creating relevant datasets. Metadata describe how and under what conditions an image was acquired, and help you decide whether the image is of the right quality for training your algorithm. For testing, the dataset must be generic and balanced across conditions, landscapes and image quality; otherwise the resulting performance figures are not meaningful.

Maxar metadata include which satellite collected the image and its angle during collection, the sun angle, the image resolution, the cloud cover percentage, and the collection date and time. We supplement this with information from open sources, primarily NASA and ESA, such as land cover, land use, weather conditions, aerosols and even snow coverage.
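One way to check the balance of conditions mentioned above is to bin each image's combined metadata into a condition group and count images per group. The sketch below assumes hypothetical field names and bin thresholds (clear below 10% cloud cover, low sun below 30° elevation); it is one possible approach, not a prescribed method.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ImageMetadata:
    image_id: str
    satellite: str
    off_nadir_deg: float      # collection angle (provider metadata)
    sun_elevation_deg: float  # sun angle (provider metadata)
    gsd_m: float              # resolution in metres (provider metadata)
    cloud_cover: float        # fraction 0..1 (provider metadata)
    land_cover: str           # enrichment from open sources, e.g. NASA/ESA products
    snow: bool                # enrichment from open sources


def condition_key(m):
    """Bin an image into a condition group (thresholds are illustrative)."""
    cloud = "clear" if m.cloud_cover < 0.1 else "cloudy"
    sun = "low_sun" if m.sun_elevation_deg < 30 else "high_sun"
    return (m.land_cover, cloud, sun, "snow" if m.snow else "no_snow")


def condition_histogram(records):
    """Count how many images fall into each condition group."""
    return Counter(condition_key(m) for m in records)


def underrepresented(hist, min_count):
    """List condition groups with fewer than min_count images."""
    return [k for k, n in hist.items() if n < min_count]
```

Groups flagged by `underrepresented` point to where more imagery is needed before a test set can be considered balanced.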

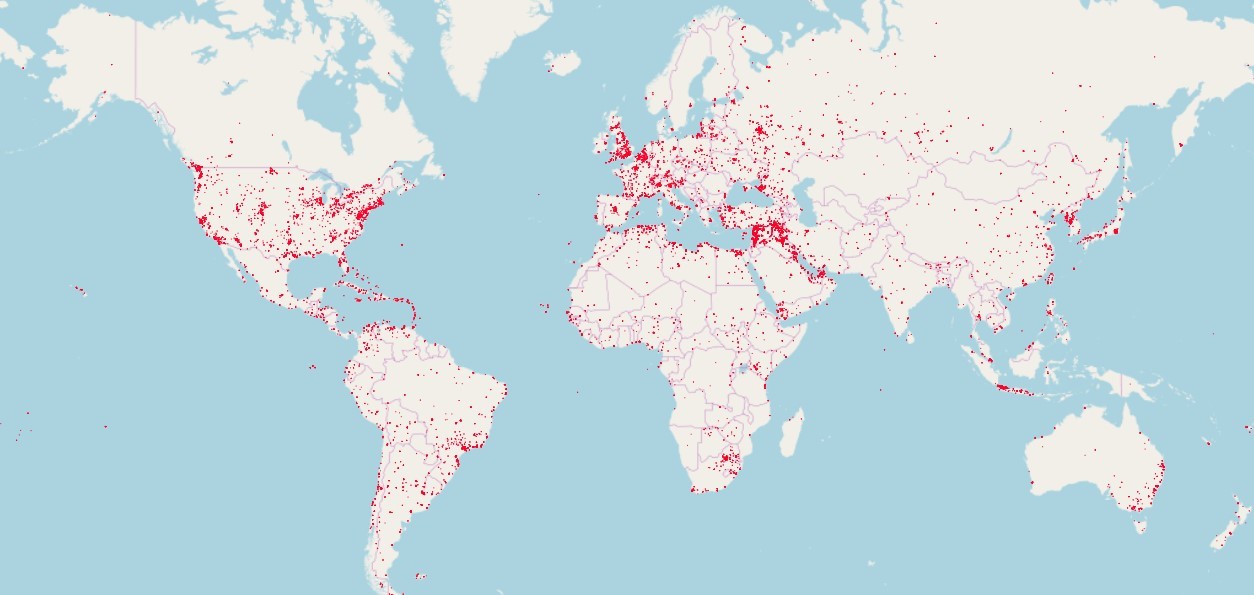

We then repeat this imagery and metadata process over multiple AOIs to satisfy the global needs of our defense customers.

Dataset generation

Now that our database is populated with imagery and associated metadata, we begin generating datasets. We divide the data into two categories: images to be labeled for training the algorithms, and images used to test and validate the algorithms’ performance. Once the algorithms are trained, we can deploy them to provide our customers with the valuable intelligence they need to make mission-critical decisions.
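A split like this can be sketched as a stratified split, so that every acquisition-condition group appears in the test set. Stratification is an assumed technique here, not necessarily the one used in production; `key` is any function mapping an image to its condition group, and groups containing a single image go entirely to training.

```python
import random
from collections import defaultdict


def stratified_split(items, key, test_fraction=0.2, seed=0):
    """Split items into (train, test), sampling the test set within each condition group."""
    rng = random.Random(seed)  # fixed seed for reproducible datasets
    groups = defaultdict(list)
    for item in items:
        groups[key(item)].append(item)

    train, test = [], []
    for group in groups.values():
        rng.shuffle(group)
        # At least one test image per group with two or more images; singletons go to training.
        n_test = max(1, round(len(group) * test_fraction)) if len(group) > 1 else 0
        test.extend(group[:n_test])
        train.extend(group[n_test:])
    return train, test
```

Sampling within each group, rather than over the whole pool, prevents rare conditions (snow, low sun) from disappearing from the test set by chance.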

In summary, SecureWatch’s streaming capabilities enable us to access thousands of AOIs around the world, select only those relevant to our customers’ needs, and download only the specific area we need rather than entire scenes.
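The saving from downloading only the AOI can be estimated by intersecting the AOI with the scene footprint. This sketch assumes axis-aligned boxes in the same projected coordinate system in metres (areas computed in degrees would be distorted); the 16 km × 16 km scene in the usage note is a hypothetical size, not a Maxar specification.

```python
from typing import Optional, Tuple

BBox = Tuple[float, float, float, float]  # (min_x, min_y, max_x, max_y) in metres


def intersect(a: BBox, b: BBox) -> Optional[BBox]:
    """Return the overlap of two axis-aligned boxes, or None if they do not overlap."""
    min_x, min_y = max(a[0], b[0]), max(a[1], b[1])
    max_x, max_y = min(a[2], b[2]), min(a[3], b[3])
    if min_x >= max_x or min_y >= max_y:
        return None
    return (min_x, min_y, max_x, max_y)


def area(b: BBox) -> float:
    return (b[2] - b[0]) * (b[3] - b[1])


def download_fraction(scene: BBox, aoi: BBox) -> float:
    """Fraction of the scene that must be downloaded to cover the AOI."""
    clip = intersect(scene, aoi)
    return 0.0 if clip is None else area(clip) / area(scene)
```

For a hypothetical 16 km × 16 km scene and a 2 km × 2 km AOI fully inside it, `download_fraction` gives 4 / 256 = 0.015625, i.e. about 1.6% of the scene.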

Moreover, thanks to Maxar’s powerful API, all queries are now automated, which has allowed Earthcube’s data science team to increase the productivity of our dataset generation process tenfold and considerably improve the relevance of our datasets. These workflow benefits carry over to the performance of our algorithms, which now exceed 95% accuracy and enable accurate, fully automated object classification.